Today, microservice architecture has become increasingly popular in companies around the globe. Microservices help keep software components well organized, shorten time to market, improve resilience, and give more control over scalability. While we enjoy all of these benefits and more, we also face a set of challenges.


Challenges in Microservices

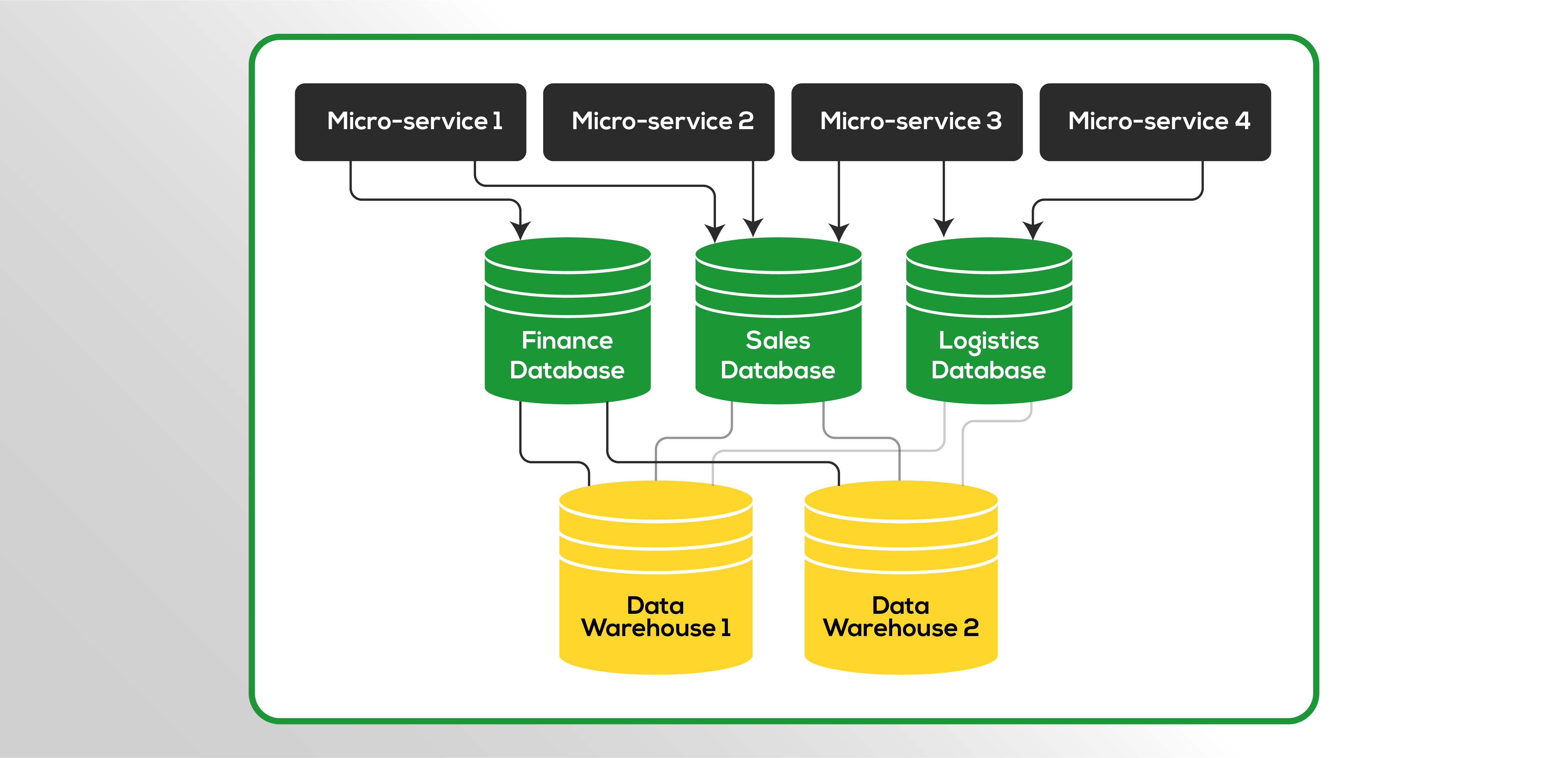

Each microservice is connected either to a database dedicated to that service or to a database shared with other services. Because the data is distributed across different databases, this setup offers no built-in way to combine data from different business functions. This creates a huge gap in providing data visibility to business operations.

Complex businesses like Ninjacart’s Supply Chain deal with a huge web of transactions involving data from various operational units (like logistics, procurement, etc.). If business operations are driven entirely by data analytics, business leads need a way to view consolidated data in a single dashboard, even though parts of that data are stored in different databases.

Data Lake as a solution

As a solution, we created a Data Lake. All the databases are replicated into the Data Lake using real-time replication channels. Each database has a Master instance that holds the latest copy of the data and is responsible for all changes to it. MySQL provides a way to replicate the changes happening on the Master instance in real time: each change to the data produces a new entry in the binary log (binlog), and any other database instance can replay those entries via MySQL binlog replication. We use this mechanism to bring all the data together into the Data Lake, where a single query can use data that originates from multiple databases, which gives us a way to connect data across business functions.
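To make the idea concrete, here is a minimal Python sketch of change-log replay. It is not how MySQL implements binlog replication; the `ChangeEvent` shape, the `DataLake` class, and the `procurement.orders` and `logistics.trips` tables are all hypothetical names chosen for illustration. It only shows the principle: changes from several sources are applied in order to one consolidated store, after which a single query can join data that originated in different databases.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ChangeEvent:
    """One row-level change, loosely modeled on a binlog entry (hypothetical shape)."""
    database: str
    table: str
    op: str                               # "insert", "update" or "delete"
    key: int                              # primary key of the affected row
    row: Optional[Dict[str, Any]] = None  # new row image; None for deletes


class DataLake:
    """Holds a copy of every replicated table, keyed by (database, table)."""

    def __init__(self) -> None:
        self.tables: Dict[Tuple[str, str], Dict[int, Dict[str, Any]]] = {}

    def apply(self, event: ChangeEvent) -> None:
        """Apply a single change in the order it was produced at the source."""
        rows = self.tables.setdefault((event.database, event.table), {})
        if event.op == "delete":
            rows.pop(event.key, None)
        elif event.op in ("insert", "update"):
            rows[event.key] = dict(event.row or {})
        else:
            raise ValueError(f"unknown operation: {event.op}")


def orders_with_trips(lake: DataLake) -> List[Dict[str, Any]]:
    """Join orders and trips, which live in two different source databases."""
    orders = lake.tables.get(("procurement", "orders"), {})
    trips = lake.tables.get(("logistics", "trips"), {})
    joined = []
    for order_id, order in sorted(orders.items()):
        for trip in trips.values():
            if trip["order_id"] == order_id:
                joined.append({"order_id": order_id,
                               "item": order["item"],
                               "vehicle": trip["vehicle"]})
    return joined


lake = DataLake()
events = [
    ChangeEvent("procurement", "orders", "insert", 1, {"item": "tomato", "qty": 40}),
    ChangeEvent("procurement", "orders", "insert", 2, {"item": "onion", "qty": 25}),
    ChangeEvent("logistics", "trips", "insert", 10, {"order_id": 1, "vehicle": "KA-01"}),
    ChangeEvent("procurement", "orders", "update", 1, {"item": "tomato", "qty": 55}),
    ChangeEvent("procurement", "orders", "delete", 2),
]
for event in events:
    lake.apply(event)

print(orders_with_trips(lake))
```

Because events are applied strictly in order, the update to order 1 and the deletion of order 2 are both reflected in the consolidated copy before the join runs.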

Below is a depiction of Replication channels across the database systems.

The Data Lake can be sized and scaled (disk space, network, CPU, memory) so that it handles the analytical workload alongside the operational workload, performs well, and keeps up with growing data requirements. Once you have analyzed database performance using metrics such as average and maximum query execution time, CPU utilization, and memory usage, you may consider scaling out with a new instance and running multiple such Data Lakes in parallel. This improves query performance and latency and also provides high availability. We use ProxySQL as a load balancer to relay SQL connections and distribute the load across multiple database systems, similar to how Nginx or Apache handles HTTP connections.

The above picture depicts how the load balancer is used to distribute the operational workload across different database servers.
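The kind of distribution a load balancer performs can be illustrated with a small Python sketch of weighted rotation. This is only an illustration of the idea, not ProxySQL's routing logic or configuration; the backend names `lake-1` and `lake-2` and their weights are assumptions made up for the example.

```python
from collections import Counter
from itertools import cycle
from typing import Dict, Iterator, List


def weighted_rotation(weights: Dict[str, int]) -> Iterator[str]:
    """Return an endless iterator of backend names, each in proportion to its weight."""
    slots: List[str] = []
    for name, weight in sorted(weights.items()):
        if weight < 0:
            raise ValueError(f"negative weight for {name}")
        slots.extend([name] * weight)
    if not slots:
        raise ValueError("at least one backend needs a positive weight")
    return cycle(slots)


# Hypothetical setup: lake-1 has twice the capacity of lake-2.
router = weighted_rotation({"lake-1": 2, "lake-2": 1})

# Route nine incoming connections and count where they land.
assigned = Counter(next(router) for _ in range(9))
print(assigned)
```

Giving a larger instance a higher weight lets it take a proportionally larger share of connections, which is one simple way to use unevenly sized Data Lake instances side by side.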

Since there are multiple replication channels, we put alerting and monitoring in place to continuously track replication status and replication delay, using the PMM tool and Grafana. These tools collect all the essential database metrics, and we use those metrics to send email and phone alerts so that the DBAs can act quickly and resolve the issue.
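As a rough sketch of the kind of rule such alerting relies on, the Python below checks a set of replication channels for stopped threads and excessive delay. The `ChannelStatus` shape, the channel names, and the 60-second threshold are assumptions for illustration; this is not a PMM or Grafana API, and in practice the values would come from the monitoring tool's own metrics.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ChannelStatus:
    """Snapshot of one replication channel (hypothetical shape)."""
    channel: str
    io_running: bool                # is the thread that fetches changes running?
    sql_running: bool               # is the thread that applies changes running?
    seconds_behind: Optional[int]   # None when the delay cannot be determined


def find_alerts(statuses: List[ChannelStatus], max_delay_seconds: int = 60) -> List[str]:
    """Return one alert message per unhealthy channel, in input order."""
    alerts = []
    for status in statuses:
        if not (status.io_running and status.sql_running):
            alerts.append(f"{status.channel}: replication stopped")
        elif status.seconds_behind is None:
            alerts.append(f"{status.channel}: replication delay unknown")
        elif status.seconds_behind > max_delay_seconds:
            alerts.append(
                f"{status.channel}: {status.seconds_behind}s behind "
                f"(limit {max_delay_seconds}s)"
            )
    return alerts


statuses = [
    ChannelStatus("logistics", True, True, 3),
    ChannelStatus("procurement", True, True, 240),
    ChannelStatus("payments", True, False, None),
]
alerts = find_alerts(statuses)
for alert in alerts:
    print(alert)
```

A stopped channel is reported before its delay is even considered, since a delay figure is not meaningful once replication has halted.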

Scale your Data Volumes to Infinity

We have talked about the need for real-time replication for the operational workload, where the business needs live data to track real-time progress on the field. Now let’s look at the analytics workload. Consider a case where our business heads want to see how today’s business is doing compared to the previous days of this month, or to compare the current month with the previous month. Such comparisons are memory- and compute-intensive, since they operate on huge amounts of data. We treat such cases as the analytics workload and consider it a different problem from the operational workload. Instead of doing such heavy computations on every request, we optimize by pre-computing the data once and using it multiple times.
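A minimal Python sketch of the pre-compute-once idea follows. The order records, their `(date, value)` shape, and the function names are assumptions for illustration; in a real system the rollup would typically be a table in the database or warehouse rather than an in-memory dictionary.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple

Order = Tuple[date, float]  # (order date, order value); hypothetical record shape


def build_daily_totals(orders: Iterable[Order]) -> Dict[date, float]:
    """Scan the raw orders once and keep one total per day."""
    totals: Dict[date, float] = defaultdict(float)
    for day, value in orders:
        totals[day] += value
    return dict(totals)


def month_total(daily_totals: Dict[date, float], year: int, month: int) -> float:
    """Answer a monthly question from the small rollup instead of the raw orders."""
    return sum(value for day, value in daily_totals.items()
               if day.year == year and day.month == month)


orders = [
    (date(2021, 5, 30), 100.0),
    (date(2021, 5, 31), 150.0),
    (date(2021, 6, 1), 120.0),
    (date(2021, 6, 1), 80.0),
    (date(2021, 6, 2), 90.0),
]

daily = build_daily_totals(orders)      # the expensive pass, done once
may = month_total(daily, 2021, 5)       # cheap, reuses the rollup
june = month_total(daily, 2021, 6)      # cheap, reuses the rollup
print(f"May: {may}, June: {june}, change: {june - may}")
```

Every later comparison (day against day, month against month) reads the rollup, so the cost of scanning the raw data is paid only when the rollup is refreshed.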

As the business grows, data volumes and data variance grow with it. If the system cannot scale, we are forced to choose between throwing away historic data, partitioning the data across two systems, or storing the data only in compressed format.

Such big data problems are fairly common and now have easier solutions available. With the prices of data storage and maintenance having dropped significantly, solving the problem is a good bet. We should be able to provide easy access to historic data, help the business mine key insights, and compare the current trend with past trends.

We used cloud solutions available in the market that focus on storing huge data volumes and on analyzing that data with decent performance. Cloud data warehouses like Snowflake and Amazon Redshift helped us deal with such problems efficiently.

With huge volumes of data, batching multiple records helps increase the speed of data replication to the cloud data warehouse. For the analytics workload, we should be willing to compromise on the freshness of data to some extent in order to cope with the increase in data volume.
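Batching itself is simple to sketch in Python. The helper name `in_batches` and the batch size of 4 are assumptions for illustration; how each batch is actually loaded into the warehouse depends on the warehouse and is left out here.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def in_batches(records: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group a stream of records into lists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Ten records become three load operations instead of ten.
batches = list(in_batches(range(10), 4))
for batch in batches:
    print(f"loading {len(batch)} records: {batch}")
```

Larger batches mean fewer round trips to the warehouse, at the price of records waiting longer before they are loaded, which is the freshness trade-off described above.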

We designed a workflow for how the data moves across the systems. This replication may require multiple steps.

This is what a typical workflow may look like:

Since the system may see frequent changes to both data and schema, we also need good visibility into the task status of the data pipeline, and a robust monitoring system that can identify failures and report issues for maintenance.

In this regard, Apache Airflow helps us build and manage the workflows required to move the data across systems.
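The two ideas behind such a workflow, running steps in dependency order and tracking a status per task, can be sketched in plain Python. This is deliberately not Airflow's API; it uses the standard-library `graphlib` module (Python 3.9+), and the `extract`, `load`, and `verify` steps are hypothetical stand-ins for whatever steps the real pipeline has.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_workflow(tasks: Dict[str, Callable[[], None]],
                 depends_on: Dict[str, Set[str]]) -> Dict[str, str]:
    """Run tasks in dependency order and record success, failed, or skipped for each."""
    status: Dict[str, str] = {}
    graph = {name: depends_on.get(name, set()) for name in tasks}
    for name in TopologicalSorter(graph).static_order():
        # A task only runs if every task it depends on succeeded.
        if any(status.get(dep) != "success" for dep in depends_on.get(name, set())):
            status[name] = "skipped"
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status


progress: List[str] = []


def extract() -> None:
    progress.append("extracted")


def load() -> None:
    # Simulated failure, to show how it surfaces in the task status.
    raise RuntimeError("warehouse unavailable")


def verify() -> None:
    progress.append("verified")


status = run_workflow(
    {"extract": extract, "load": load, "verify": verify},
    {"load": {"extract"}, "verify": {"load"}},
)
print(status)
```

The per-task status map is what makes a failure visible and actionable: the failed step is identified directly, and the steps downstream of it are marked as skipped rather than run against incomplete data.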

As for performance on cloud data warehouses, you may expect a 2-3x improvement in query performance compared to RDBMS systems. This gain comes out of the box, without any transformations, aggregations, or data modelling done on the data.

The bottom line is that our data platform provides quick access for any kind of data requirement from the business, while the architecture stays simple, robust, and scalable.

. . .

Written by

Sumanth Mamidi

Technical Manager

Tech Team – Ninjacart
