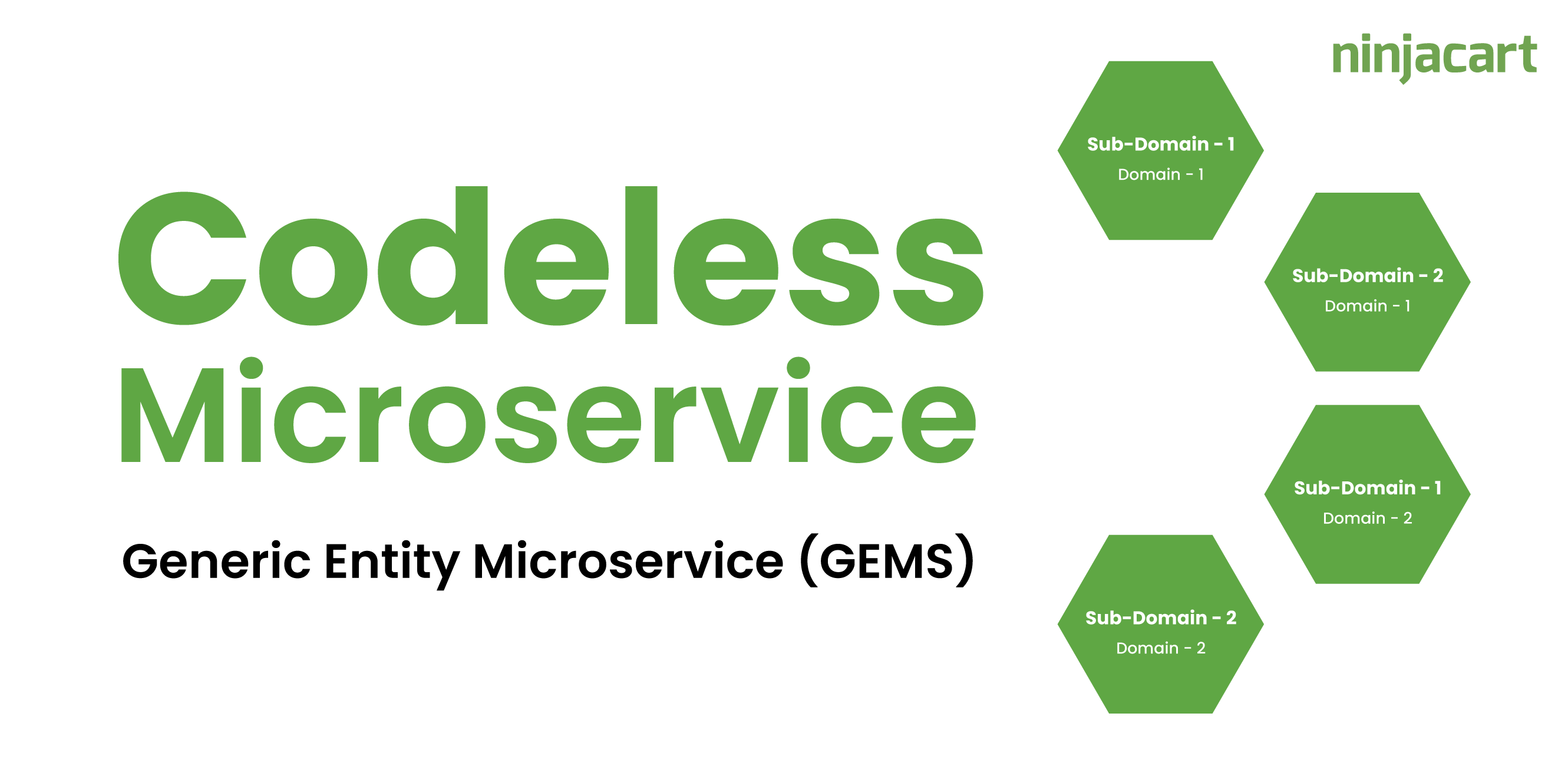

The Problem Statement

At Ninjacart, we frequently need to develop APIs for multiple domains. Traditionally, building an API for a domain entity meant designing the data model, defining the interface, and writing the service implementation. As a result, supporting all the SCRUD (Search, Create, Read, Update, Delete) APIs for a single entity took developers around 2 to 3 days.


When we had to support N entities, a lot of time went into writing the same boilerplate code again and again. In short, these are the problems we wanted to solve:

- How can developers focus more on entity design?

- How can we reduce the time spent writing the same SCRUD APIs?

The Solution

To solve these problems, we adopted the schema-on-read concept. In schema-on-read, a schema is applied to the data as it is read from storage, rather than as it is written.

Below are the key requirements we kept in mind while applying the schema-on-read concept:

- A schema store

- The ability to validate the schema structure

- APIs that are available for consumption as soon as a schema is created in the store

- Support for schema evolution

- Strict adherence to the microservice design principle of loose coupling with external systems, using the Hexagonal (ports and adapters) design pattern

- Support for auditing

- Support for multiple data sources
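The hexagonal requirement can be pictured with a minimal ports-and-adapters sketch. It is written in Python for brevity, even though the service itself is built with Spring Boot. The names `EntityStore`, `InMemoryEntityStore`, `EntityService`, and the "farmer" entity are all hypothetical. The core service depends only on the port, so a MongoDB adapter or another data source can be plugged in without touching it.

```python
import uuid
from abc import ABC, abstractmethod
from typing import Dict, Optional


class EntityStore(ABC):
    """Port (hypothetical): the only persistence contract the core service knows about."""

    @abstractmethod
    def save(self, entity_type: str, record: dict) -> str: ...

    @abstractmethod
    def find(self, entity_type: str, record_id: str) -> Optional[dict]: ...


class InMemoryEntityStore(EntityStore):
    """Adapter: an in-memory stand-in for a real MongoDB adapter, handy in tests."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, dict]] = {}

    def save(self, entity_type: str, record: dict) -> str:
        # Reuse a caller-supplied id, otherwise generate one
        record_id = record.get("id") or str(uuid.uuid4())
        self._data.setdefault(entity_type, {})[record_id] = {**record, "id": record_id}
        return record_id

    def find(self, entity_type: str, record_id: str) -> Optional[dict]:
        return self._data.get(entity_type, {}).get(record_id)


class EntityService:
    """Core logic: talks to the EntityStore port, never to a concrete database."""

    def __init__(self, store: EntityStore) -> None:
        self._store = store

    def create(self, entity_type: str, record: dict) -> str:
        return self._store.save(entity_type, record)

    def read(self, entity_type: str, record_id: str) -> Optional[dict]:
        return self._store.find(entity_type, record_id)


service = EntityService(InMemoryEntityStore())
new_id = service.create("farmer", {"name": "Ravi"})
print(service.read("farmer", new_id)["name"])  # Ravi
```

Replacing `InMemoryEntityStore` with a database-backed adapter would require no change to `EntityService`. This is the loose coupling the requirement asks for.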

Core Component

The core component of this system is the schema store. We chose Apicurio (https://www.apicur.io/) because it supports multiple schema formats, such as JSON, JSON-LD, XML, Avro, and GraphQL. It supports schema versioning by default and provides a client SDK to read and update schemas by version.
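The following is a minimal in-memory stand-in for a versioned schema store, included to show what schema versioning provides. It does not use the Apicurio client SDK. The names `SchemaStore`, `register`, and `get` are hypothetical, the schemas are simplified dictionaries chosen for illustration, and Python is used for brevity.

```python
from typing import Dict, List, Optional


class SchemaStore:
    """Keeps every version of each entity's schema; versions are numbered from 1."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[dict]] = {}

    def register(self, entity_type: str, schema: dict) -> int:
        """Store a new schema version and return its version number."""
        versions = self._versions.setdefault(entity_type, [])
        versions.append(schema)
        return len(versions)

    def get(self, entity_type: str, version: Optional[int] = None) -> dict:
        """Return the requested version, or the latest one when version is None."""
        versions = self._versions.get(entity_type)
        if not versions:
            raise KeyError(f"unknown entity type: {entity_type}")
        if version is None:
            return versions[-1]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{entity_type} has no version {version}")
        return versions[version - 1]


store = SchemaStore()
v1 = store.register("farmer", {"required": ["name"]})
v2 = store.register("farmer", {"required": ["name", "phone"]})  # the schema evolves
print(v1, v2)                              # 1 2
print(store.get("farmer")["required"])     # ['name', 'phone']
print(store.get("farmer", 1)["required"])  # ['name']
```

Older versions stay readable after a new one is registered, so clients pinned to an earlier version keep working while the entity's schema evolves.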

The first data store we support is MongoDB, and we use Elasticsearch for search. For auditing, we use an event-based architecture.

Below is the architecture for our Generic Entity Service.

In this architecture, the developer focuses only on data model design and uploads the schema to apicur.io in JSON-LD format. The six core APIs, BSCRUD (Bulk, Search, Create, Read, Update, and Delete), are written in Spring Boot and deployed.
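The key idea is that the six BSCRUD operations are written once and parameterized by entity type instead of being rewritten per entity. The sketch below illustrates this. It is an in-memory Python sketch, not the Spring Boot service: `GenericEntityHandler` and its method names are hypothetical, and the dictionary stands in for MongoDB and Elasticsearch.

```python
import uuid
from typing import Dict, List, Optional


class GenericEntityHandler:
    """One implementation of the six BSCRUD operations, shared by every entity type."""

    def __init__(self) -> None:
        # entity type -> record id -> record; stands in for the real data stores
        self._records: Dict[str, Dict[str, dict]] = {}

    def _table(self, entity_type: str) -> Dict[str, dict]:
        return self._records.setdefault(entity_type, {})

    def create(self, entity_type: str, record: dict) -> str:
        record_id = str(uuid.uuid4())
        self._table(entity_type)[record_id] = {**record, "id": record_id}
        return record_id

    def bulk(self, entity_type: str, records: List[dict]) -> List[str]:
        return [self.create(entity_type, r) for r in records]

    def read(self, entity_type: str, record_id: str) -> Optional[dict]:
        return self._table(entity_type).get(record_id)

    def update(self, entity_type: str, record_id: str, changes: dict) -> bool:
        table = self._table(entity_type)
        if record_id not in table:
            return False
        table[record_id] = {**table[record_id], **changes, "id": record_id}
        return True

    def delete(self, entity_type: str, record_id: str) -> bool:
        return self._table(entity_type).pop(record_id, None) is not None

    def search(self, entity_type: str, **criteria) -> List[dict]:
        # Naive exact-match filter; the real service delegates search to Elasticsearch
        return [r for r in self._table(entity_type).values()
                if all(r.get(k) == v for k, v in criteria.items())]


handler = GenericEntityHandler()
ids = handler.bulk("farmer", [{"name": "Ravi"}, {"name": "Anita"}])
handler.update("farmer", ids[0], {"name": "Ravi K"})
print(handler.read("farmer", ids[0])["name"])        # Ravi K
print(len(handler.search("farmer", name="Anita")))   # 1
handler.delete("farmer", ids[1])
print(handler.search("farmer", name="Anita"))        # []
```

Adding a new entity type requires no new handler code, only a new value for the `entity_type` argument.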

Once the model is deployed, clients invoking these BSCRUD APIs only need to pass the entity type as a path parameter. At run time, we use the entity type in the path parameter to load the schema and validate the given JSON against it. If schema validation fails, the API call does not succeed. Below is a sample API signature:

/api/v1/{entityType}

Here, {entityType} is the name of the entity we uploaded to the schema store.
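The run-time flow for a create call on /api/v1/{entityType} can be sketched as follows: look up the schema by entity type, validate the payload, and reject the request if there are any errors. This is a Python sketch under stated assumptions. `SCHEMAS`, `validate`, and `handle_create` are hypothetical. The schema format is a tiny subset (required fields plus simple types) rather than JSON-LD. The status codes are illustrative. A production service would use a complete JSON Schema validator instead of this hand-rolled check.

```python
from typing import Dict, List, Tuple

# Hypothetical schemas keyed by entity type, standing in for the schema store
SCHEMAS: Dict[str, dict] = {
    "farmer": {
        "required": ["name", "phone"],
        "properties": {"name": "string", "phone": "string", "acres": "number"},
    }
}

# Maps the type names used in the schemas above to Python types
TYPE_MAP = {"string": str, "number": (int, float), "boolean": bool}


def validate(entity_type: str, payload: dict) -> List[str]:
    """Return a list of validation errors; an empty list means the payload is valid."""
    schema = SCHEMAS.get(entity_type)
    if schema is None:
        return [f"unknown entity type: {entity_type}"]
    errors = [f"missing field: {f}" for f in schema["required"] if f not in payload]
    for name, value in payload.items():
        expected = schema["properties"].get(name)
        if expected is None:
            errors.append(f"unexpected field: {name}")
        elif isinstance(value, bool) and expected != "boolean":
            # bool is a subclass of int in Python, so reject it explicitly for numbers
            errors.append(f"{name} should be {expected}")
        elif not isinstance(value, TYPE_MAP[expected]):
            errors.append(f"{name} should be {expected}")
    return errors


def handle_create(entity_type: str, payload: dict) -> Tuple[int, dict]:
    """Mimics POST /api/v1/{entityType}: reject the request on any schema error."""
    errors = validate(entity_type, payload)
    if errors:
        return 400, {"errors": errors}
    return 201, payload


print(handle_create("farmer", {"name": "Ravi", "phone": "9800000000"})[0])  # 201
print(handle_create("farmer", {"name": "Ravi", "acres": "ten"}))
# (400, {'errors': ['missing field: phone', 'acres should be number']})
```

Because the schema is looked up on every request, a schema newly uploaded to the store is enforced without redeploying the service.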

Search is served by Elasticsearch, while the other APIs, such as Create and Read, are built on top of MongoDB.

Once a record is persisted in MongoDB, an asynchronous request indexes it in Elasticsearch and publishes an audit event to Azure Event Hubs. All our services are deployed on Azure.
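The write path described above works like this: the primary write happens first, and then indexing and auditing run in the background. The sketch below uses Python's asyncio. The dictionaries and list are hypothetical stand-ins for MongoDB, Elasticsearch, and Azure Event Hubs, and `Pipeline`, `create`, and `drain` are illustrative names, not the service's actual components.

```python
import asyncio
from typing import Dict, List


class Pipeline:
    """Primary write first; search indexing and audit publishing run in the background."""

    def __init__(self) -> None:
        self.primary: Dict[str, dict] = {}       # stands in for MongoDB
        self.search_index: Dict[str, dict] = {}  # stands in for Elasticsearch
        self.audit_log: List[dict] = []          # stands in for Azure Event Hubs
        self._pending: List[asyncio.Task] = []

    async def _index(self, record_id: str, record: dict) -> None:
        await asyncio.sleep(0)  # placeholder for a network call to the search cluster
        self.search_index[record_id] = record

    async def _publish_audit(self, entity_type: str, record_id: str) -> None:
        await asyncio.sleep(0)  # placeholder for sending an event to the event hub
        self.audit_log.append({"entity": entity_type, "id": record_id, "action": "CREATE"})

    async def create(self, entity_type: str, record_id: str, record: dict) -> str:
        self.primary[record_id] = record  # the request succeeds once this write is done
        # Fire-and-forget: the caller does not wait for indexing or auditing
        self._pending.append(asyncio.create_task(self._index(record_id, record)))
        self._pending.append(asyncio.create_task(self._publish_audit(entity_type, record_id)))
        return record_id

    async def drain(self) -> None:
        """Wait for background work; useful in tests and on shutdown."""
        await asyncio.gather(*self._pending)
        self._pending.clear()


async def main() -> Pipeline:
    pipeline = Pipeline()
    await pipeline.create("farmer", "f1", {"name": "Ravi"})
    print("f1" in pipeline.search_index)  # False: background tasks have not run yet
    await pipeline.drain()
    print("f1" in pipeline.search_index, len(pipeline.audit_log))  # True 1
    return pipeline


pipeline = asyncio.run(main())
```

One consequence of this design is that a search issued immediately after a create may not see the new record yet, whereas a read by ID from the primary store will.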

What’s Next?

- Once the schema is uploaded to apicur.io, we would like to generate SDL (Schema Definition Language) automatically.

- This will enable us to introduce authorization in our generic entity services with row-level filtering as well as column-level filtering.
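Since this is future work, the following is purely illustrative. It shows what row-level and column-level filtering mean in practice: first drop the rows a user may not see, then strip the columns they may not see. The `Policy` shape, the field-agent rule, and the sample records are assumptions, not the planned design, and Python is used for brevity.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set


@dataclass
class Policy:
    """Hypothetical per-role policy for one entity type."""
    row_filter: Callable[[dict, dict], bool]  # (record, user) -> may the user see this row?
    visible_columns: Set[str] = field(default_factory=set)


def authorize(records: List[dict], user: dict, policy: Policy) -> List[dict]:
    """Apply row-level filtering first, then remove columns the user may not see."""
    return [
        {k: v for k, v in r.items() if k in policy.visible_columns}
        for r in records
        if policy.row_filter(r, user)
    ]


farmers = [
    {"id": 1, "name": "Ravi", "city": "Kolar", "phone": "9800000001"},
    {"id": 2, "name": "Anita", "city": "Hosur", "phone": "9800000002"},
]
# Example rule: a field agent sees only farmers in their own city, without phone numbers
agent_policy = Policy(
    row_filter=lambda record, user: record["city"] == user["city"],
    visible_columns={"id", "name", "city"},
)
print(authorize(farmers, {"city": "Kolar"}, agent_policy))
# [{'id': 1, 'name': 'Ravi', 'city': 'Kolar'}]
```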

Conclusion

With this architecture, do we no longer need to write APIs at all?

The answer is no.

What this architecture does offer is the following:

- It is well suited for APIs that involve no business logic or that mostly serve static data.

- It reduces development time by eliminating repetitive boilerplate code in our services.

- Developers can focus solely on the APIs that carry business logic.

With this approach, we at Ninjacart were able to fast-track all our releases well ahead of schedule.

Written by

Karthikeyan Karunanithi

Architect – 1.2

Software Development
